Mmmmmmmmm. Data.

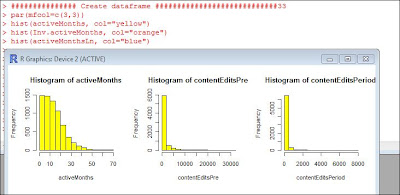

I am updating my description of Data Analysis for the Spring semester. The course will be a bit different from what it was, but in short, we will be doing projects: starting with collecting data using an online survey, then recoding data using Excel formulas, doing simple analyses in Excel, and finally working with larger-scale datasets in R. Throughout, we will work on learning how to work with data, perform standard analyses, and understand what the analyses mean. Everyone will do actual factual projects using those larger and very real datasets.

Here are some details that you should know about:

You should buy a copy of "Discovering Statistics Using R". In fact, you should get it as a "Christmas Present" or any other seasonally defined gift for yourself. I know, what could be more exciting? Not much!

Here are lectures on statistics from the author of the book, Andy Field:

https://www.youtube.com/user/ProfAndyField

Add them to your list of YouTube infotainment options.

There are some online resources related to the book: http://www.sagepub.com/dsur/study/default.htm

I am not sure whether I will use any of those, but I thought you might want to know that they are there.

I will be updating the content of our course, the schedule, and the assignments on the course page:

https://sites.google.com/site/professorwelser/courses/data-analysis